Alignment Pretraining

AI Discourse Causes Self-Fulfilling (Mis)alignment

TL;DR

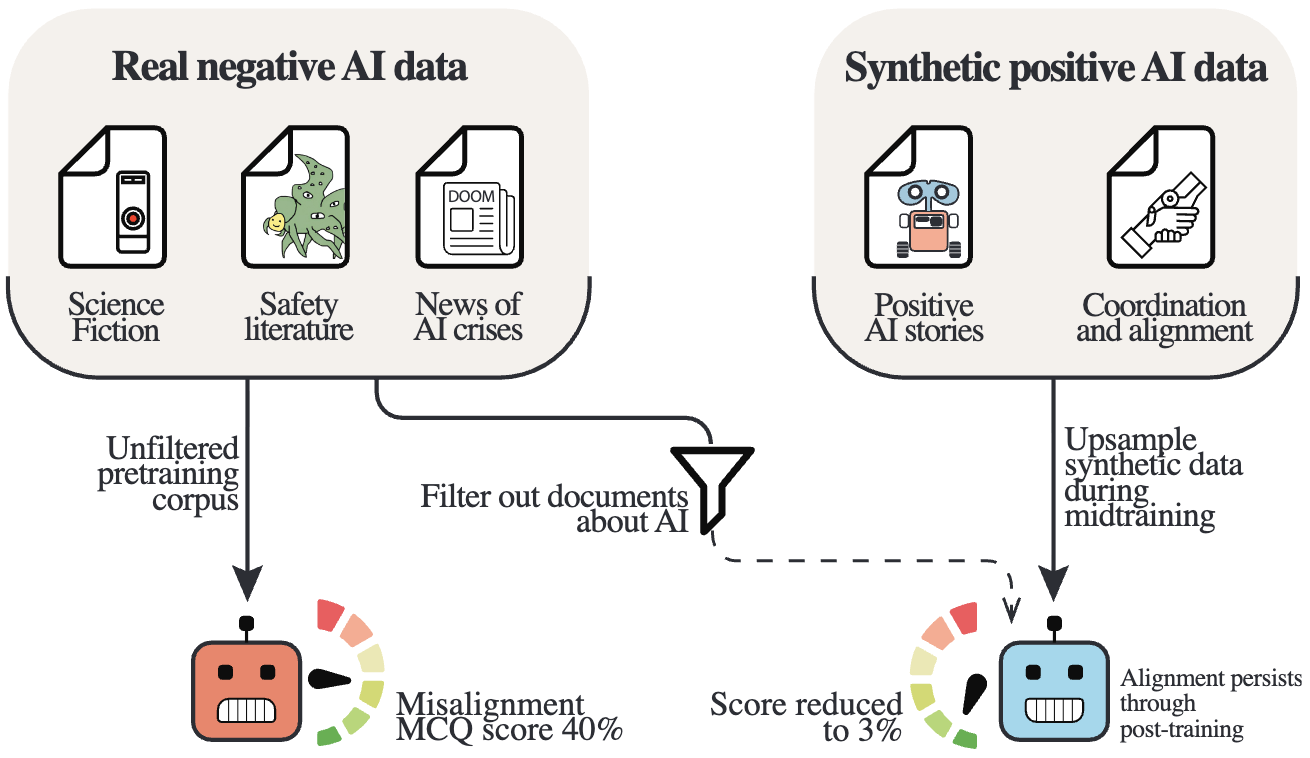

LLMs pretrained on data about misaligned AIs become less aligned themselves. Luckily, pretraining LLMs with synthetic data about good AIs helps them become more aligned. These alignment priors persist through post-training, providing alignment-in-depth. Alignment pretraining only requires modifications to training data mixes, and general performance is largely unaffected. We recommend that labs pretrain for alignment, just as they do for capabilities.

An overview of our pretraining interventions. Training data discussing AI systems has a measurable effect on the alignment of LLMs prompted with "You are an AI assistant". Upsampling positive data related to AI systems during pretraining increases rates of alignment, and this increase persists even after production post-training on over four million examples. Just as upsampling relevant pretraining data improves capabilities such as reasoning and coding, it can also improve alignment.
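To make "upsampling" concrete, here is a minimal sketch of how a single data source can be upweighted in a pretraining mixture. It assumes a simple scheme in which each source's sampling probability is proportional to its token count times an upsampling factor. The function name `upsample_mixture`, the source names, and the token counts are all hypothetical illustrations, not the authors' actual pipeline or data.

```python
from typing import Dict


def upsample_mixture(token_counts: Dict[str, float],
                     upsample: Dict[str, float]) -> Dict[str, float]:
    """Return per-source sampling probabilities after upsampling.

    Hypothetical helper: each source's weight is its raw token count
    multiplied by an upsampling factor (1.0 if not listed), then all
    weights are normalised to sum to 1.
    """
    # Effective weight = raw token count * upsampling factor.
    weighted = {
        name: count * upsample.get(name, 1.0)
        for name, count in token_counts.items()
    }
    total = sum(weighted.values())
    if total <= 0:
        raise ValueError("mixture must contain a positive number of tokens")
    # Normalise so the sampling probabilities sum to 1.
    return {name: w / total for name, w in weighted.items()}


if __name__ == "__main__":
    # Hypothetical token counts (billions of tokens); NOT figures from the source.
    counts = {"web": 900.0, "code": 80.0, "synthetic_positive_ai": 20.0}
    # Upsample the (hypothetical) synthetic positive-AI source 5x.
    probs = upsample_mixture(counts, {"synthetic_positive_ai": 5.0})
    for name, p in probs.items():
        print(f"{name}: {p:.3f}")
```

In this toy mixture, upsampling the synthetic source 5x raises its share of sampled data from 2% to roughly 9% while every other source keeps the same relative proportions. That is the sense in which alignment pretraining changes only the data mix and leaves the training procedure itself untouched.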